To Congress: It’s critical to center impacts of AI tech in ongoing conversations on AI regulation

The release of generative AI tools like ChatGPT and Bard into the public sphere fueled an already massive AI buzz over the last year. It also spurred wide discussion of, and concern about, artificial intelligence's potential for harm, and there's not much regulation in place to mitigate these harms.

We joined a coalition of civil society and advocacy groups, including the Brennan Center for Justice at NYU School of Law, the Center for Democracy and Technology, the Center on Race and Digital Justice, and 83 other public interest groups, urging lawmakers to center “the well-being and rights of the American people” as they consider the risks and opportunities of AI technology. The letter specifically called out the following harms:

- Screening tools used by companies to streamline hiring have created barriers to employment for people with disabilities, women, older people, and people of color.

- Inaccuracies or poor design in AI systems can obstruct people’s access to sorely needed public benefits.

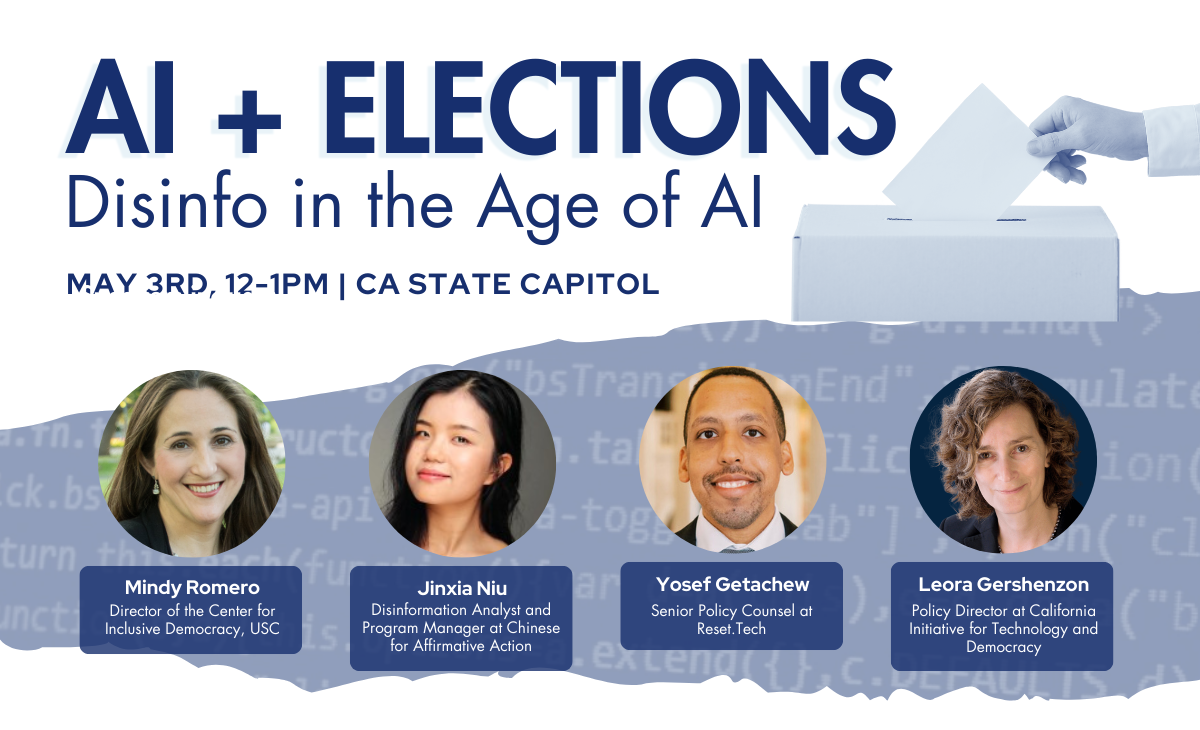

- The easy creation of manipulated video and audio is fueling consumer fraud and extortion schemes and raises critical questions for the election-related information environment and public discourse.

- AI used in high-stakes decisions by law enforcement, immigration, and national security agencies can trample people’s civil rights and civil liberties: Americans have been arrested and incarcerated because police facial recognition systems made a wrong match, the overwhelming majority of them Black Americans.

- Generative AI tools can supplant the demand for creative work, threatening creators’ livelihoods.

- The enormous energy and water requirements associated with large language models threaten efforts to combat climate change, while the unchecked use of Americans’ information to build these models threatens our privacy and the freedoms of speech and association.

You can read the full letter here:

Show Your Support

Do you believe that we should enact responsible guardrails for AI? Show your support by sharing this on social media! Use the social share buttons on the left-hand side of this page to quickly signal boost this message.