How do we create a people-first approach to AI policy?

Generative AI has captured the attention of people around the world. ChatGPT and other large language models excited millions of people—and created a sense of fear for others. But in some critical aspects of our lives, AI has already arrived, impacting everyone.

Automated decision-making systems, predictive algorithms, and many other emergent technologies are determining who can live where, whether we have stable employment, who interacts with law enforcement, and whether people can gain access to credit and other financial resources.

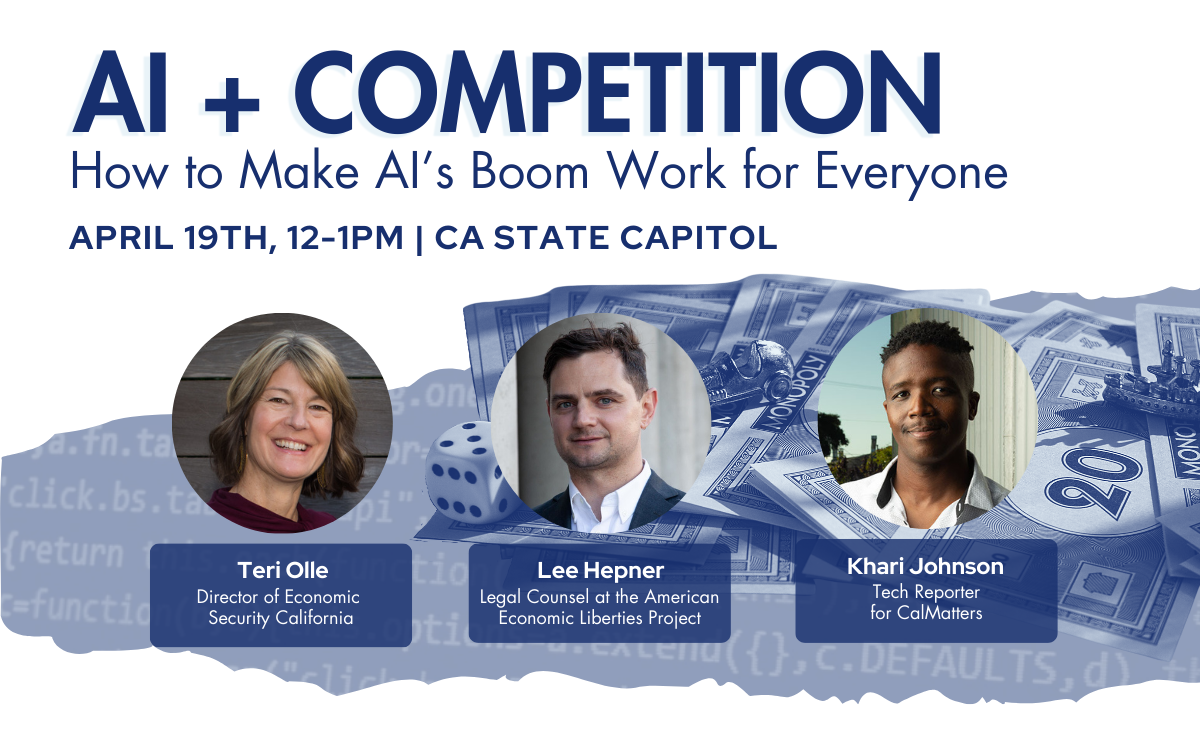

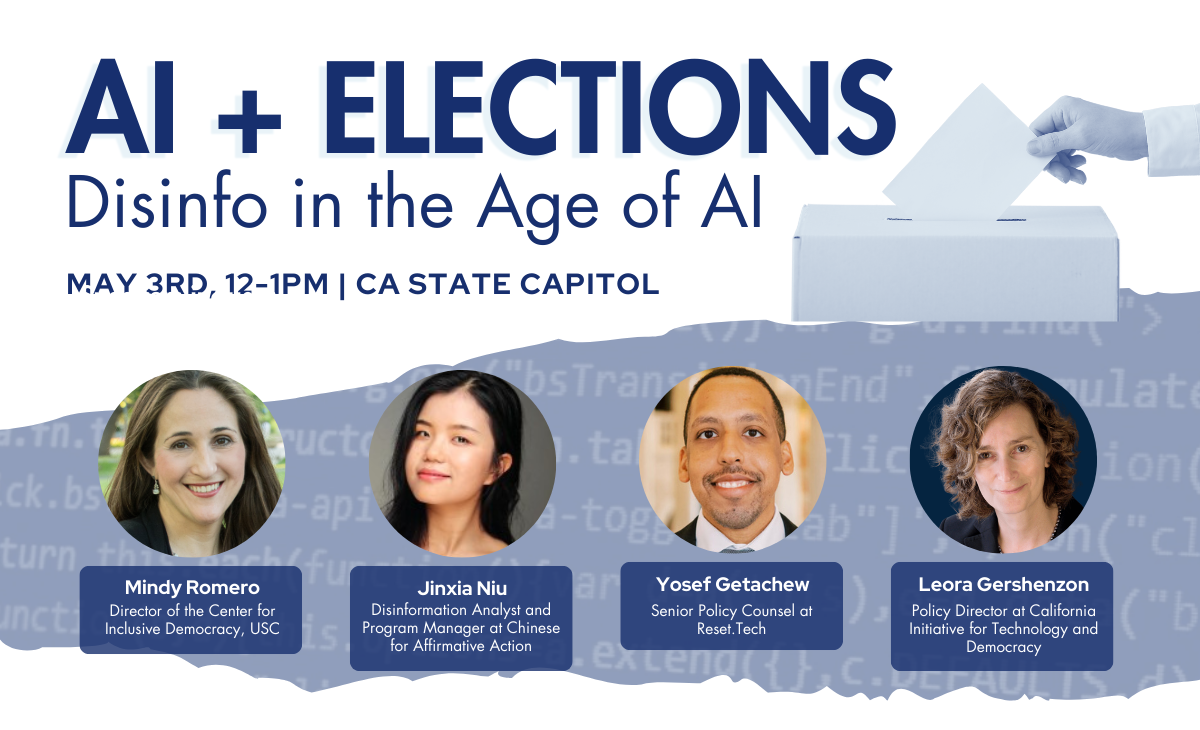

That’s why we headed to Sacramento to discuss a people-first policy approach to AI and equity with experts and advocates. These are our major takeaways.

AI and other emerging technologies are everywhere, touching every part of our lives

So what are some ways that experts and advocates see AI popping up in everyday life?

Jake Snow, Technology and Civil Liberties Attorney at the ACLU of Northern California, highlighted the criminal legal system’s use of AI, specifically AI-backed facial recognition software. The Gender Shades Project demonstrated that this software is less accurate when analyzing the faces of women and people of color. When police rely on it in investigations, those errors can have devastating effects on marginalized people.

Workers across industries and professions are also being negatively impacted by AI, particularly through mass layoffs and management technology. But as Sara Flocks, Legislative and Strategic Campaigns Director of the California Labor Federation, noted, companies and industrialists using technology to advance their own interests is nothing new. They’ll do so even at the price of worker well-being.

The term Luddite comes from the original Luddites, workers who fought back against manufacturers who used technology to replace skilled workers and drive down wages (journalist Kim Kelly discusses them in her book on the history of labor organizing). Sound familiar? Today, workers are even paid low wages to train the technology that will be used to replace them.

Even if AI doesn’t impact your workplace and you never have an encounter with the criminal legal system, all of our data is still being collected and sold to train AI, as Hayley Tsukayama, Associate Director of Legislative Activism at the Electronic Frontier Foundation (EFF), pointed out. And those AI-backed systems are being used to shape our world.

We need guardrails to ensure that AI tools don’t exacerbate harm

Even though AI is increasingly part of our everyday lives, there has been some pushback against creating legislation to protect people from the harms of AI and other emerging technologies. There’s a narrative that AI and AI-backed technology are being developed and deployed too rapidly for policy to keep up.

Who benefits from this idea, though? It’s the people who have power. This is one of the reasons why WGA and SAG-AFTRA put technology at the center of their contract negotiations in 2023, and why they had to go on strike in the first place.

Unfortunately, not everyone has a union to negotiate for them, and no one has one in every part of their life. That’s why we have civil rights laws, which also apply to new technology, as Snow pointed out. While applying these laws to algorithmic tools might feel unfamiliar, that’s exactly how the legal system should work. Look no further than California’s constitution, which contains a 50-year-old right to privacy that protects us to this day.

Of course, we still need updates and new laws to keep up. This is what Tsukayama works on at the EFF, specifically with data autonomy. It’s hard to imagine effectively creating data autonomy laws when the collection and sale of our data are only accelerating, but that’s exactly why this work is more important than ever.

We need to center the people impacted by these technologies when developing them and creating regulation

While we’re creating regulations and developing new technologies, we need to keep people at the center. AI is touching all aspects of daily life—without proper intervention, millions of people will pay the price.

So how do you create a people-first approach to technology? You start with listening to people’s stories and crafting policy interventions around their needs. Check out our AI Policy Principles, which include ensuring that people impacted by AI have the agency to shape technology.

Watch the full event on demand to learn more.