Elections in the Age of AI

The United States is now entering its first-ever generative AI election.

AI deepfakes will inundate our political discourse and voters may not know what images, audio, or video they can trust. Powerful, easy-to-access new tools will be available to candidates, conspiracy theorists, foreign states, and online trolls who want to deceive voters and undermine trust in our elections.

These threats are not theoretical. We are already seeing elections affected by generative AI deepfakes and disinformation in Bangladesh and Slovakia and, closer to home, in Chicago and the Republican presidential primary.

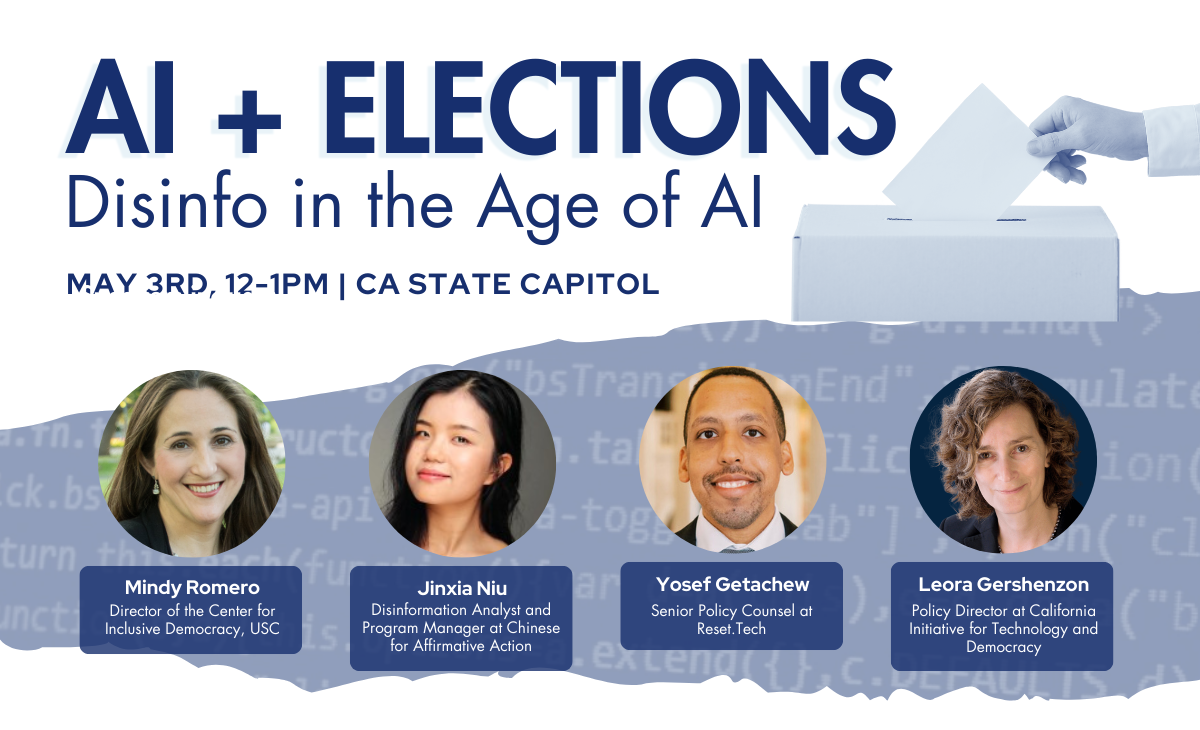

That’s why on May 3rd we went to Sacramento to talk to experts and advocates about how to craft policy and establish the guardrails needed to ensure that AI can be supportive of, not detrimental to, democracy around the world. These were our major takeaways.

Want a primer on AI? Check out our What Exactly Is AI? blog to get the 101.

Disinformation erodes the ability of democracies to function

Everyone participates in information sharing. People also need to be able to express their political opinions—especially in order for democracy to function. Mindy Romero, Director of the Center for Inclusive Democracy at USC, noted that these are especially important avenues of participation for non-citizens, only some of whom get to vote in local elections in the US.

The expectation that voters be informed, which a healthy democracy depends on, has itself been weaponized. During the Jim Crow era, for instance, Black people were commonly given impossible literacy tests to determine whether they could vote.

Disinformation serves as another means of disenfranchising voters. Both then and today, disinformation can be used by foreign governments to interfere with our elections. It also heightens distrust and spurs political violence against marginalized groups and election officials. And, as Jinxia Niu, Disinformation Analyst and Program Manager at Chinese for Affirmative Action, explained, disinformation serves to control how marginalized voters vote, or even to discourage them from voting.

Non-English-speaking groups are especially targeted. Niu specifically works on a project combating disinformation targeting Chinese-language speakers.

All of these together restrict the ability to have a system of governance that represents the people it governs.

Disinformation is nothing new—generative AI, though, ups the ante

Going back a couple hundred years, Leora Gershenzon, Policy Director at the California Initiative for Technology and Democracy (CITED), noted that examples of disinformation sparking doubt about democracy date to George Washington’s time.

So what’s new? Disinformation is now being turbocharged by generative AI, as Yosef Getachew, Senior Policy Counsel at Reset.Tech, asserted.

Generative AI works through a process called machine learning, in which large amounts of data are fed to a set of algorithms that learn the patterns in that data, sometimes with the support of a human “trainer.” With generative AI tools, another set of algorithms then uses those learned patterns to create something new.

Through training, generative AI could, for example, learn what President Joe Biden looks like and sounds like. It could then be prompted—the prompt being another set of data—to create a video of Joe Biden in which he tells people to not vote.

Generative AI can also be used to create thousands of very real-seeming fake social media accounts to share the video, spreading it across the Internet in seconds and also lending some legitimacy to its lies. After all, the thinking goes, if so many people believe something, it must be true.

Niu shared how fact-checking the products of generative AI is increasingly difficult, both because of how quickly the tools produce content and because of how quickly they are becoming more sophisticated.

So what do we do about the AI of it all?

Fact-checkers are themselves using AI to take on disinformation and generative AI. Language is a barrier with these tools, though, as disinformation detection software and generative AI are mostly trained in English. Organizations like Chinese for Affirmative Action are working hard to improve access by creating tools trained in other languages like Mandarin.

As for the government, states like Washington are passing legislation to require political ads that use generative AI to be labeled as such. Texas passed a law criminalizing the use of deepfakes of candidates. However, it’s hard to know how it can be enforced in practice, given Section 230. There are currently dozens of bills on AI more generally moving through the California legislature.

All of the speakers agreed that research is desperately needed to understand what’s at work here and create meaningful ways to address AI-fueled election disinformation.

Watch the full AI + Elections event recording to learn more: